François-Xavier Vialard

Faculty member (teaching and research) at the Laboratoire d’informatique Gaspard Monge.

Interests

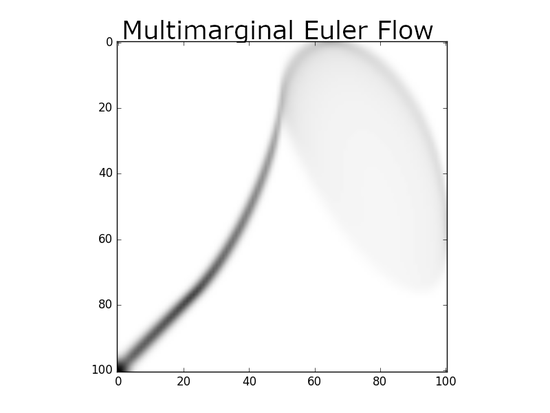

- Optimal transport; applications and numerics.

- Diffeomorphic flows; machine learning and medical image registration applications.

- Calculus of variations and geometry; applications to shape spaces and fluid flows.

- Applied and computational mathematics.