Sinkhorn algorithm (also called IPFP for Iterative Proportional Fitting Procedure) is an alternating optimisation algorithm which has gained a lot of attention in the last 10 years, when it was popularised by Marco Cuturi for approximation of optimal transport with applications in machine learning. Instead of looking at the standard optimal transport problem, we consider the more general linear programming problem in the form, $x$ is the optimisation variable, $$ \min_x \langle c, x \rangle $$ under the constraint $Ax = b$ and $x \geq 0$ (entry-wise inequality) where $c,b$ are given vectors, and $A$ is a matrix. This minimization problem can be approximated by adding an (entropic) penalty on $x$, namely the Kullback-Leibler divergence or relative entropy between the variable $x$ and a chosen vector $y >0$ (could be a vector of ones), $$ KL(x;y) = \sum_i x_i\log(x_i/y_i) -x_i+y_i$$ so our regularised optimisation problem becomes, for $\varepsilon >0$ a small parameter, $$P = \min_x \langle c, x \rangle + \varepsilon KL(x;y)$$ under the constraint $Ax = b$. Note that the constraint of nonnegativity on $x_i$ has been removed since the set of $x \geq 0$ is the domain of definition of the entropy. In fact the value of $x\log(x)$ is $0$ at $x = 0$ but its derivative is infinite, so a minimiser of the « entropic » objective function satisfies necessarily $x_i >0$. Using first-order optimality condition, one gets that the unique optimiser (here by strict convexity of the entropy) satisfies $$ x = ye^{\frac 1 \varepsilon (A^\top p - c)}$$ where $A^\top$ is the transpose of $A$ for an unknown vector $p$ (the multiplication is to be understood as coordinate-wise multiplication). The regularized optimisation problem is convex and its dual reads $$D =\sup_p \langle p, b\rangle - \varepsilon \sum_i y_i KL^\star(\frac 1\varepsilon [A^\top p - c]_i)\,.$$ Here $KL^\star = e^{x} - 1$ is the Fenchel-Legrendre transform of $x\log(x) - x$.

This problem is now a smooth unconstrained concave maximisation problem and standard optimisation methods can be used.

However, in some particular instances such as optimal transport, which has a particular structure for the matrix $A$, one can define cheaper iterations. In this case, the primal problem reads, for a matrix variable $\pi$ and two vectors (the measures) $b_1,b_2$ which are nonnegative (entry-wise) vectors and sum up to $1$, $$P = \min_\pi \langle c, \pi \rangle + \varepsilon KL(\pi; b_1 \otimes b_2)$$ under the constraints $ \sum_i \pi(i,j) = b_2(j)$, $ \sum_j \pi(i,j) = b_1(i)$ and the entry-wise positivity of $\pi(i,j) \geq 0$.

Its dual problem naturally decomposes the dual variable into two vectors $(p_1(i),p_2(j))$ that account for the two marginal constraints. It reads (up to an additive constant) $$\sup_{p_1,p_2} \langle p_1, b_1\rangle + \langle p_2, b_2\rangle - \varepsilon \sum_i b_1(i)b_2(j) e^{(\frac 1\varepsilon [p_1(i) + p_2(j) - c(i,j)])}\,.$$ Alternate maximisation is cheap in this case due to the explicit form of the maximizers; Fix $p_2$, then the maximizer over $p_1$ is given by $$ p_1(i)= -\varepsilon \log(\sum_i e^{\frac{1}{\varepsilon}(p_2(j) - c(i,j))}b_2(j))$$ and similarly for $p_2$. The Sinkhorn algorithm consists in alternating this optimization on $(p_1,p_2)$. This algorithm is proved to be linearly convergent with a rate that degrades exponentially with $\varepsilon$ and in the low epsilon regime, it still enjoys standard convex optimization rates.

There is by now a huge litterature on the Sinkhorn algorithm and it is not the purpose of this post to present it and rather, we want to present variants or generalisations of the Sinkhorn algorithm which define cheap iterations and are still efficient for optimization the dual or primal cost. Note that Sinkhorn algorithm on the general problem above, that is alternate maximization, on the dual problem can be applied but it requires the solution of a concave maximization problem for each chosen variable. The Sinkhorn algorithm finds its interest in the computationally cheap iterations it defines.

For generalizations of optimal transport such as

- Martingale optimal transport, [Margheriti].

- Weak optimal transport, [Lindheim, 2023].

- Moment constrained optimal transport, [Sturmfels et al., 2022].

the decomposition of the dual problem into blocks of dual variables naturally appears; There might be other OT generalizations in which the GIS could be applied and studied. However, the alternate maximization is sometimes not explicit and it is possible to resort to inner optimization method to solve it, at the risk of loosing the computational benefit of the iterations. Our paper [Sturmfels et al., 2022] for entropic regularization of the conic formulation of unbalanced optimal transport falls in the third case and instead of the Sinkhorn algorithm, we proposed to use the generalization described below. The question we now address is

What is a replacement for the Sinkhorn algorithm in the situations mentioned above and in more general situations?

An answer has been proposed in the literature under at least two different names, the MART algorithm1 introduced in the 70’s in the imaging community by Gordon, Bender and Herman (see Section 4 of [Byrne]) and the Iterative Scaling algorithm ([SheTang]) introduced in the statistical literature by Darroch and Ratcliff, also around the same time.

Although the formulation of the problem is equivalent to the above, its starting point is slightly different. It consists in optimizing $$\min_x KL(x,y)$$ under the constraint that $Ax = b$; assuming that this constraint is feasible, there exists $z$ such that $Az = b$. This is essentially equivalent to the entropic regularized problem with $\varepsilon = 1$ by choosing $ye^{-c}$ instead of $y$.

The most common setting is when $A$ is a matrix of positive entries (see [SheTang] for generalizations) and for simplicity we assume that $A^\top$ has lines which sum up to a maximum of $R$, then the proposed iterations by Darroch and Ratcliff are of the form $$ x_{k+1} = x_{k} e^{\frac 1R A^\top \log(b/Ax_{k})}\,.$$ As explained in [SheTang], this iterative scheme can be recast in a majorization-minimization setting ([Lange]) on the dual problem which we now explain. The dual problem (with a minus sign to turn it convex) reads again $$D(p) = -\sum_{j} p(j) b(j) + \sum_{i} y(i) e^{ \sum_{j} \tilde a(i,j)p(j)}\,,$$ where $\tilde a(i,j)$ denotes the coefficient of $A^\top$. Let me just discuss the case when all the lines of $A^\top$ sum up to $1$. To obtain a majorizing function, one can apply Jensen inequality to the exponential function to get ($k$ denoting the iteration number) $$ D(p_{k} + \delta ) \leq D(p_{k}) - \sum_{j} \delta(j) b(j) + \sum_{i,j}y(i) \tilde a(i,j) e^{ \sum_{j} \tilde a(i,j)p_k(j)} e^{ \delta(j)}$$ which is a separable function in $\delta(j)$. The optimization on $\delta(j)$ is explicit and is given by $$ \delta(j) = \log(b(j) / Ax_{k})$$ where $ x_{k} = y e^{A^\top p_{k}}\,,$ so one defines $$ p_{k+1} = p_{k} + \log(b / Ax_{k})\,$$ which implies the iterates proposed above on the primal variable $$ x_{k+1} = x_{k}e^{A^\top \log(b/Ax_{k})}\,.$$ Note that this maximization-minimization framework can be applied on each variable $\delta(j)$ so that one can choose to update all at once, or sequentially, or mixing these strategies in different ways such as:

blocking strategies which update some groups of variables.

Randomization of the chosen blocks.

These two strategies are proven to be useful and we refer to [SheTang] for a detailed discussion and many variations of these kind of algorithms2.

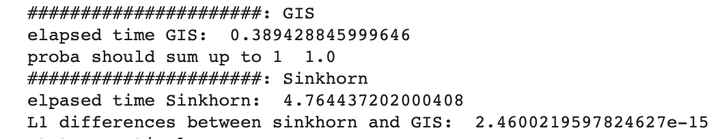

In conclusion, coordinate optimization on the dual variables in entropic optimal transport is sometimes not explicit, however, majorization-minimization approaches on these steps can be attractive due to the cheap cost of the iterations, particularly for large scale settings. Importantly, this generalized iterative scaling algorithm goes way beyond OT related problems since it applies to entropic regularization of every Linear Programming problem which has nonnegativity constraint and a matrix $A$ with nonnegative coefficients.

References.

[SheTang] Yiyuan She and Shao Tang, Iterative Proportional Scaling Revisited: A Modern Optimization Perspective (Journal of Computational and Graphical Statistics, 2018).

[Byrne] Charles Byrne, AUXILIARY-FUNCTION MINIMIZATION ALGORITHMS (Applied Analysis and Optimization, 2018).

[Margheriti] William Margheriti, Sur la stabilité du problème de transport optimal martingale (PhD thesis (in french), 2020). https://hal.science/tel-03133128v2/document

[Sturmfels et al., 2022] Bernd Sturmfels, Simon Telen, François-Xavier Vialard, Max von Renesse, Toric geometry of entropic Regularization (Journal of Symbolic Computation, 2022).

[Lange] Kenneth Lange, MM OPtimization algorithms, SIAM 2016.

[Lindheim, 2023] J. von Lindheim, G. Steidl, Generalized Iterative Scaling for Regularized Optimal Transport with Affine Constraints: Application Examples (ArXiv, 2023).