In a project with a student, I recently derived a non-local diffusion PDE which turns out, surprisingly for me, to have a name: Stein variational descent. Let me explain the way I derived it, which has a starting point conceptually different from the current literature. This point of view stems from shape analysis, well developed in the past fifteen years and which has been put forward by the pioneering work of Ulf Grenander [1]. A key notion is the notion of group action on a collection of objects of interest.

Group action and Riemannian metrics.

Let me consider the abstract situation in which one is given a (Lie) group $G$ and a left action of it on a manifold $M$ (at least to compute derivatives). A natural construction to obtain a Riemannian metric on $G$ which is “compatible” with a metric on its orbits consists in using a scalar product $\langle \cdot,\cdot\rangle$ on the Lie algebra of $G$, say $T_{Id}G $ and pushing it onto $G$ and $M$.

Right-invariant metric on $G$.

First, I detail how to push it onto $G$ by using right-translations: The map $R_h: G \mapsto G$ defined by $R_h(g) = gh$ is the right-translation by $h \in G$. Denoting $\delta g \cdot h = DR_h(g)(\delta g)$ (that is right-translating the tangent vector $\delta g$ at point $g$), one can define the right-invariant metric on the group $G$ by $$ g_G(h)(\delta h,\delta h) := | \delta h \cdot h^{-1} |^2\,. $$ Such a right-invariant Riemannian metric on group of diffeomorphisms have been put forward by V. Arnold in the late sixties to study the incompressible Euler equation as a geodesic flow [2]. In this case, V. Arnold proves (at least formally) that the incompressible Euler equation are geodesics for the right-invariant metric which uses the $L^2$ norm (with respect to the Lebesgue measure) on the space of vector fields, which is the tangent space at identity of the group of diffeomorphisms. This point of view enabled the breakthroughs by Ebin, Marsden (short time existence of the incompressible Euler flow in any dimension on a closed manifold) and Brenier (every smooth Euler flow is length minimizing for short times). Considering flows of vector fields has also been extensively used in pattern theory and more precisely computational anatomy, in which the problem is to find a diffemorphism of the whole space to transform one object into another one, typically a medical image of an organ. Here, the term object can be understood in a broad sense, but it requires to support a transformation by a diffeomorphism. Examples are surfaces, functions, differential forms, probability measures, currents… The important case for our discussion is the case of probability measures, and in particular densities (smooth positive functions which are integrable), for which Moser’s theorem says that the action of the group of diffeomorphisms is transitive (i.e. between two any densities, there exists a diffeomorphism that pushforwards one onto the other).

Induced metric on $M$.

We now come back to the abstract situation of the group action $G \times M \to M$. Now, if the action of $G$ on $M$ is a submersion (i.e. surjective as well as its differential), one can define the following Riemannian metric on $M$ by

$$ g_M(q)(v,v) := \inf_{\xi \in T_{Id}G} \{ | \xi |^2 \, ; \, \xi \cdot q = v \}\,. $$ In order to make clear the formula above, I need to introduce the infinitesimal action $$\xi\cdot q := \frac{d}{dt}_{t=0} g(t) \cdot q$$ for any curve $g(t)$ such that $g(0) = Id$ and $g’(0) = \xi$. Now, this metric on $M$ is “compatible” with the one on $G$ in the following mathematical sense, for a given $q_0 \in M$, the map $g \to g.q_0$ is a Riemannian submersion from $G$ to $M$. Riemannian submersion is the extension of orthogonal projection to the non-linear setting. One interest of this Riemannian geometry structure is to have O’Neill’s formula for the curvature of $M$ in terms of the curvature of $G$. This formula shows that there is more curvature on $M$ than on $G$. Another interest of this structure is that every geodesic on $M$ has a “natural” notion of pre-image, called horizontal lift.

This general setup can be instantiated to a group of diffeomorphisms with a right-invariant metric specified by a Reproducing kernel Hilbert space (RKHS). RKHS are Hilbert spaces of maps for which the pointwise evaluation is continuous. Standard functional spaces such as Sobolev spaces, for a high enough order, are particular examples. An RKHS can also be defined via its kernels. The Gaussian kernel is often used, and Matern kernels correspond to Sobolev spaces (for high-order enough). The scalar product on the Lie algebra being defined, it induces a Riemannian metric on the space of densities. In this case, the infinitesimal action is $v \cdot \rho = -\operatorname{div}(\rho v)$ where $\rho$ is the density and $v$ is a vector field. Now, it’s possible to write the geodesic equations for this metric $$ \partial_t \rho + \operatorname{div}(\rho v) = 0 $$ $$\partial_t \psi + \langle \nabla \psi, v \rangle = 0$$ $$v + K \star (\rho \nabla \psi ) = 0,$$ where $K$ is the kernel associated with the RKHS, $\rho$ is the probability measure and $\psi$ is a potential function. Note that the second equation is simply the advection of $\psi$ by the flow of $v$. Interestingly, these equations can be instantiated to an empirical measure $\rho = \frac 1n \sum_{i = 1}^n \delta_{x_i}.$ $$ \dot{x}_i = \sum_j K(x_i,x_j)p_j$$ $$ \dot{p}_i = - \sum_j \langle p_i , \partial_1 K(x_i,x_j)p_j\rangle $$ which are Hamiltonian equations for the Hamiltonian function $$ H(p,q) := \frac 12 \sum_{i,j} \langle p_i , K(x_i,x_j)p_j\rangle\,. $$ Even the curvature of this space of $n$ distinct points (also called landmarks) have been computed in [4]. For other actions of the group of diffemorphisms, the reader can refer to [3] where the corresponding geodesic equations are written explicitly. The infinite dimensional situation complicates the study but is also interesting in itself, see for instance D. Mumford’s blog and his work with P. Michor.

Comments.

There are many methods in medical image registration that directly use this formulation and if not, can be recast in this setting. For instance, registration methods in medical imaging such as Demons in the early 2000’s were sometimes based on greedy algorithms to find a deformation from object $A$ to object $B$, in other words, a gradient flow (gradient descent in continuous time) of an objective functional without any regularization. The so-called method of large deformation by diffeomorphism metric mapping (LDDMM) goes one step further by introducing a regularization based on the length of the path described by the flow, see [3]. In the abstract setting above, one specifies a loss term, a function of interest that has to be minimized, $F: M \mapsto \mathbb{R}$ and minimize it using gradient descent with the Riemannian structure on $M$ that comes from $G$. When looking at convergence of this gradient flow, one needs structural assumptions/properties on $F$ to guarantee positive results. For example, Polyak-Lojasiewicz (PL) condition is of particular interest on Riemannian manifold. However, proving this condition sometimes comes back to geodesic convexity, which can be hard to prove in practice. Remarkably, beautiful examples can be found for classical groups and their induced metric on a vector space in [8].

The gradient flow of Kullback-Leibler divergence w.r.t. the induced right-invariant metric.

So, coming back to the main subject, how come this metric has something to do with Stein variational descent ? In the setting of densities and the group action above, one can make a gradient flow and hope for convergence. For people used to optimal transport, it is well-known that gradient flow of the relative entropy or Kullback-Leibler (KL) divergence with respect to the Wasserstein metric leads to the Fokker-Planck equation, for which exponential convergence can sometimes be shown under some conditions on the target measure (here again geodesic convexity w.r.t. the Wasserstein distance is key). In a recent project, I computed the gradient flow of the KL divergence with a target measure $\rho_\infty$ with respect to the induced metric discused above. This simply means continuous time gradient descent on the function $\rho \mapsto KL(\rho,\rho_\infty)$ with respect to this metric on the space of densities (and let me insist for people used to optimal transport, not with respect to the Wasserstein metric). A direct calculation leads to \begin{equation} \partial_t \rho = \operatorname{div}(\rho K \star ((\nabla \rho) - \rho \nabla \log(\rho_\infty) )). \end{equation} Writing $\rho_{\infty} = e^{-V}$, it gives $$ \partial_t \rho= \operatorname{div}(\rho \nabla K \star \rho + K \star \rho \nabla V) ).$$ I was surprised that this equation has drawn some recent interest in machine learning and mathematic communities. It was introduced in [5] for approximating the posterior in Bayesian methods with a collection of particles. The derivation that I mention above can be found in a few papers, see [6] and [7]. Experimentally, the vanilla method suffers from collapse to closest mode and if not, imbalanced mode representation (see the video below) and the literature improves on it via different technics.

Theoretically, the question of convergence (in a mean field regime) has only been settled recently in [6]. Indeed, although the Stein variational descent appears as a diffusion PDE, it was not covered in PDE literature before, maybe because Stein variational descent is a non-local PDE which did not appear in the physic literature (?). Convergence is not the end of the story here since, as said above, the convergence of the Wasserstein gradient flow of the KL divergence can lead to exponential convergence. This exponential convergence is for instance guaranteed by a log-Sobolev inequality $$ KL(\rho,\rho_{\infty}) \leq \lambda | \nabla_{W^2} KL(\rho,\rho_\infty)|^2_{W^2(\rho)}\,, $$ for a positive $\lambda$. Note that this inequality has to be satisfied for all $\rho$ and it is therefore a constraint on $\rho_\infty$. The formulation above is rather non-standard and we refer the reader to log-Sobolev inequality for classical formulation and many others. However, this formulation clearly shows that it is a PL type of inequality, which guarantees exponential convergence of the KL divergence to $0$. What matters here is to have candidates for the target measure $\rho_{\infty}$ which satisfy this log-Sobolev inequality. This condition has been studied extensively, for instance in Euclidean space a sufficient condition is $\nabla^2 V \geq c >0$. However, such a PL inequality in the case of the $G$-induced Riemannian metric on the space of probability measures only starts being studied and local results (i.e. near the target measure, corresponding to the Poincaré inequality) have been explored so far, see [7].

Conclusion:

To sum up the main message here, Stein variational descent is obtained as a gradient flow for the metric induced on the space of densities by the corresponding right-invariant metric on the group of diffeomorphisms. Although this connection has probably no direct practical implication, it gives a unifying point of view on similar methods in different fields.

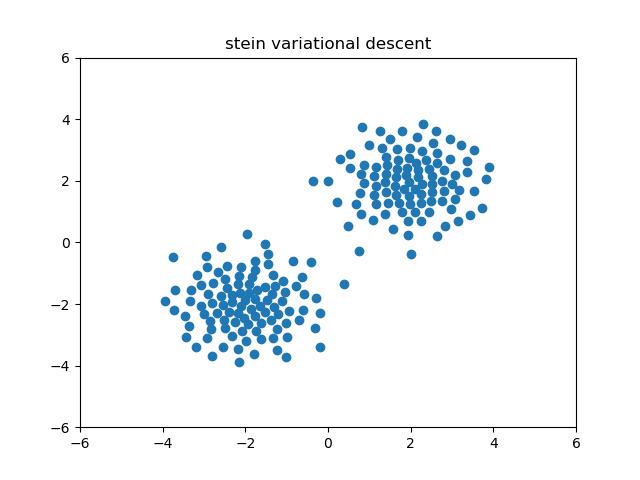

Let me finish up with a simulation of the Stein variational descent. We start from a a gaussian distribution of independent samples which is transformed via Stein variational descent towards a mixture of two gaussians of equal size. For these source and target choices, the Stein method works quite well. However, under some perturbations of the source or the target, the results are not so satisfying which is due to the non-convexity of the objective functional.

References:

[1] Grenander, U. (1994). General Pattern theory.

[2] Arnold, V. (1966), Sur la géometrie différentielle des groupes de Lie de dimension infinie et ses applications à l’hydrodynamique des fluides parfaits. Ann. Inst. Fourier.

[3] Younes, L and Arrate, F and Miller, M. (2009) Evolutions equations in computational anatomy, Neuroimage.

[4] Micheli, M., Michor , P. and Mumford, D. (2008) Sectional Curvature in Terms of the Cometric, with Applications to the Riemannian Manifolds of Landmarks, SIAM imaging sciences.

[5] Liu, Q and Wang, D. (2016) Stein variational gradient descent: A general purpose bayesian inference algorithm, NeurIPS.

[6] Lu, Y., Lu, J. and Nolen, J. (2018) SCALING LIMIT OF THE STEIN VARIATIONAL GRADIENT DESCENT: THE MEAN FIELD REGIME, SIAM.

[7] Duncan, A. , Nusken, N and Szpruch L. (2019) On the geometry of Stein variational gradient descent

[8] Burgisser, P, Franks, C. , Garg A., Oliveira, R., Walter, M. and Wigderson, A. (2019) Towards a theory of non-commutative optimization: geodesic first and second order methods for moment maps and polytopes