Unbalanced Optimal Transport, from Theory to Numerics

Abstract

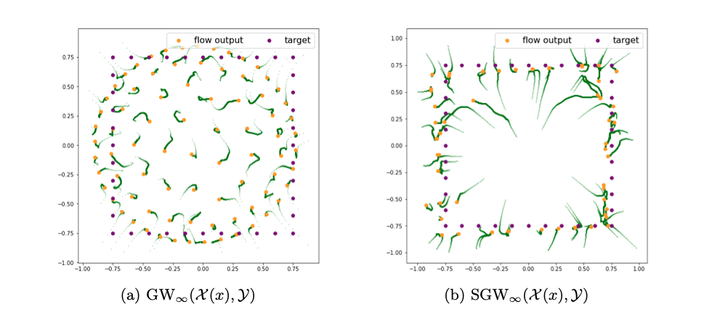

Optimal Transport (OT) has recently emerged as a central tool in data science for comparing point clouds, and more generally probability distributions, in a geometrically faithful way. The wide adoption of OT in existing data analysis and machine learning pipelines is, however, hindered by several shortcomings. These include its lack of robustness to outliers, its high computational cost, the need for a large number of samples in high dimension, and the difficulty of handling data in distinct spaces. In this review, we detail several recently proposed approaches to mitigate these issues. We focus in particular on unbalanced OT, which compares arbitrary positive measures, not restricted to probability distributions (i.e. their total mass can vary). This generalization of OT makes it robust to outliers and missing data. The second workhorse of modern computational OT is entropic regularization, which leads to scalable algorithms while lowering the sample complexity in high dimension. The last point presented in this review is the Gromov-Wasserstein (GW) distance, which extends OT to cope with distributions belonging to different metric spaces. The main motivation for this review is to explain how unbalanced OT, entropic regularization and GW can work hand-in-hand to turn OT into efficient geometric loss functions for data science.