CARL: A Framework for Equivariant Image Registration

Abstract

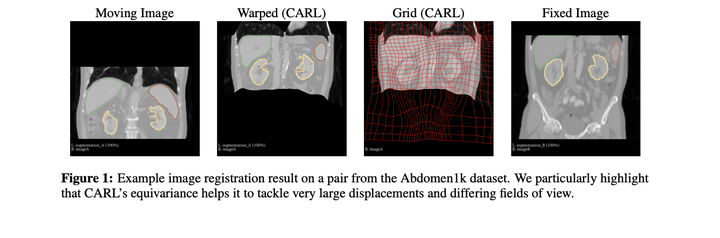

Image registration estimates spatial correspondences between a pair of images. These estimates are typically obtained by numerical optimization or by regression with a deep network. A desirable property of such estimators is that a correspondence estimate (e.g., the true oracle correspondence) for an image pair is maintained under deformations of the input images. Formally, the estimator should be equivariant to a desired class of image transformations. In this work, we present careful analyses of the desired equivariance properties in the context of multi-step deep registration networks. Based on these analyses, we 1) introduce the notions of [U,U] equivariance (network equivariance when both input images undergo the same deformation) and [W,U] equivariance (where the input images can undergo different deformations); 2) show that, in a suitable multi-step registration setup, overall [W,U] equivariance is guaranteed if the first step has [W,U] equivariance and all subsequent steps have [U,U] equivariance; 3) show that common displacement-predicting networks exhibit only [U,U] equivariance to translations rather than the more powerful [W,U] equivariance; and 4) show how to achieve multi-step [W,U] equivariance via a coordinate-attention mechanism combined with displacement-predicting refinement layers (CARL). Overall, our approach obtains excellent practical registration performance on several 3D medical image registration tasks and outperforms existing unsupervised approaches for the challenging problem of abdomen registration.
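
To make the two equivariance notions concrete, the following is a minimal toy sketch in Python, not the CARL architecture. It assumes 1D periodic images, integer translations as the only transformation class, and the convention that a registration map Φ satisfies I^A ∘ Φ ≈ I^B, under which [W,U] equivariance is taken to mean Φ[I^A ∘ W, I^B ∘ U] = W⁻¹ ∘ Φ[I^A, I^B] ∘ U (this formula is an assumption, as the abstract does not spell it out). The helper names `warp`, `register`, and `is_equivariant` are hypothetical. An exhaustive search over all shifts stands in for a [W,U]-equivariant estimator, and a search restricted to a small window stands in for an estimator that only handles the [U,U] case.

```python
import numpy as np


def warp(image, shift):
    """Return image ∘ T, where T(x) = x + shift on a periodic 1D grid."""
    return np.roll(image, -shift)


def register(image_a, image_b, candidates):
    """Return the shift d in `candidates` minimizing ||image_a ∘ T_d - image_b||^2."""
    costs = [np.sum((warp(image_a, d) - image_b) ** 2) for d in candidates]
    return candidates[int(np.argmin(costs))]


def is_equivariant(image_a, image_b, candidates, w, u):
    """Check Phi[A∘W, B∘U] == W^-1 ∘ Phi[A, B] ∘ U for translations W, U.

    For translations, W^-1 ∘ T_d ∘ U is the shift d + u - w (modulo grid size).
    Setting w == u gives the [U,U] check.
    """
    n = len(image_a)
    d = register(image_a, image_b, candidates)
    d_new = register(warp(image_a, w), warp(image_b, u), candidates)
    return (d_new - (d + u - w)) % n == 0


n = 64
rng = np.random.default_rng(0)
image_a = rng.standard_normal(n)
image_b = warp(image_a, 3)  # ground truth: image_a ∘ T_3 == image_b

global_search = list(range(n))      # all shifts: toy [W,U]-equivariant estimator
local_search = list(range(-4, 5))   # small window: toy estimator with limited range

global_uu = is_equivariant(image_a, image_b, global_search, w=10, u=10)
global_wu = is_equivariant(image_a, image_b, global_search, w=20, u=2)
local_uu = is_equivariant(image_a, image_b, local_search, w=10, u=10)
local_wu = is_equivariant(image_a, image_b, local_search, w=20, u=2)

print("global search: [U,U] =", global_uu, " [W,U] =", global_wu)
print("local search:  [U,U] =", local_uu, " [W,U] =", local_wu)
```

In this toy setting, the exhaustive search passes both checks, while the windowed search passes only the [U,U] check: shifting both images identically leaves the relative offset unchanged, whereas shifting them differently can push the required offset outside the window. This is only an analogy for the limited equivariance of displacement-predicting networks described above, not a model of them.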